Selenium

Web scraping

Deepankara Reddy

Software Developer

Web Scraping With Selenium

How to perform web scraping using Selenium

Web scraping is an essential tool for any data scientist and machine learning practitioner. A common analogy is that data is the fuel for machine learning models, and because ML models are hungry for data, we need an efficient way to harvest all this information. Large datasets are becoming more readily available for public use, but the vast majority of information remains in the wild, particularly on websites themselves. This is where web scraping comes in. Manually going through a website and copying relevant information is time-consuming and impractical, so instead we automate the process.

In the most basic sense, web scraping involves sending HTTP requests to sites and then parsing the responses. There are many tools and frameworks available for web scraping, but in this tutorial we'll use Selenium. Selenium is an automation tool primarily used for end-to-end testing in browsers, but it can be used for any sort of browser automation. What sets Selenium apart from many other scraping methods is that it uses WebDriver to control a real browser, which simulates the experience of an actual user. When we run our script, Selenium will open a new browser window and run through all our commands, clicking on links and opening different pages in rapid succession. Selenium's API is easy to use: if you know some basic Python and CSS, you can start scraping websites. (N.B. Selenium's API is available in several popular languages, not just Python.)
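The following sketch shows the basic request-then-parse cycle. It uses only Python's standard library and does not involve Selenium or a browser. `fetch()` sends an HTTP GET with `urllib.request`, and `parse_links()` uses `html.parser` to pull out every link. The function and class names are illustrative. This approach only sees the HTML that the server returns. It does not run JavaScript or click anything, and that is the gap a browser-automation tool like Selenium fills.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class LinkCollector(HTMLParser):
    """Collect (href, text) pairs for every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._current_href = None  # href of the <a> tag we are currently inside
        self._current_text = []    # text fragments seen inside that <a> tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._current_href = dict(attrs).get("href")
            self._current_text = []

    def handle_data(self, data):
        if self._current_href is not None:
            self._current_text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._current_href is not None:
            text = "".join(self._current_text).strip()
            self.links.append((self._current_href, text))
            self._current_href = None


def fetch(url):
    """Send an HTTP GET request and return the decoded response body."""
    # Some sites reject urllib's default User-Agent; this value is an arbitrary example.
    request = Request(url, headers={"User-Agent": "Mozilla/5.0 (tutorial-scraper)"})
    with urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def parse_links(html):
    """Parse an HTML string and return its links as (href, text) tuples."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    return collector.links
```

For example, `parse_links(fetch("https://www.linuxquestions.org/questions/linux-software-2/"))` should return the page's links, provided the site accepts the request.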

Suppose we run an online forum where users ask about software-related issues. We want to build an ML system that identifies duplicate questions and perhaps redirects the user to the original thread. To do this, we'll need a lot of data, i.e. a corpus of questions that users have previously asked. For this hypothetical scenario, we'll scrape user questions from the website LinuxQuestions.org.

Installation:

To get started, let's install the necessary dependencies. We'll create a new conda environment specifically for web scraping projects and install the requisite packages. For this tutorial, the only package we need is Selenium. We can set everything up with one line in Bash:

```bash
$ conda create -n scraping python=3.7 selenium
```

We just created a new environment called "scraping" with version 3.7 of the Python interpreter and the Selenium package installed. Remember to activate the new environment:

```bash
$ conda activate scraping
```

As previously mentioned, Selenium uses what's called a WebDriver. The driver is not part of Selenium itself. Each browser vendor develops its own (e.g. Chrome/Chromium has ChromeDriver, Firefox has geckodriver, etc.). We need to download the WebDriver that matches the browser we want to automate. For this walk-through, we'll use Firefox's driver, which Mozilla publishes on the geckodriver releases page on GitHub. After downloading and extracting the archive, we get a file called “geckodriver”. We can place the file anywhere we want, but since we'll reuse the driver in other projects, we should choose a safe and sensible location. Since I'm working on a Linux machine, I decided to place the geckodriver file inside my /usr/local/bin/ folder. (Selenium 4.6 and later also ship with Selenium Manager, which can locate or download a matching driver automatically when none is found on the PATH. The manual setup described here still works and makes it clear what's going on.)

So, we've downloaded our WebDriver, but we haven't "installed" it yet. The Selenium documentation mentions a few different ways to install drivers. We'll do it the Linux way and add the driver's directory to the PATH environment variable, which is quick and easy in Bash.

First, check whether the driver's directory is already in our PATH:

```bash
$ echo $PATH
```

In fact, /usr/local/bin is already in my PATH, but if it weren't, we could add a new directory to PATH like so:

```bash
$ echo 'export PATH=$PATH:/path/to/driver' >> ~/.bash_profile
$ source ~/.bash_profile
```

We can check that the path has been added correctly by typing geckodriver in the terminal. You should get a message similar to this:

```
1643755824359 geckodriver INFO Listening on 127.0.0.1:4444
```

(Press Ctrl+C to stop it.)
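You can also run this check from Python, which is the environment Selenium will actually run in. The standard library's `shutil.which()` searches the PATH the same way the shell does. `find_driver` is an illustrative helper name.

```python
import shutil


def find_driver(name="geckodriver"):
    """Return the full path of the executable `name` if it is on PATH, else None."""
    return shutil.which(name)


path = find_driver()
if path:
    print("Found driver at", path)
else:
    print("geckodriver is not on PATH; re-check the export line in ~/.bash_profile")
```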

Coding the logic:

With our conda environment created and dependencies installed, we can begin programming. Open your preferred IDE, create a new project, and create a new Python file called scrape_linuxquestions.py.

First, we need to import Selenium:

```python
from selenium import webdriver
```

We then start a new session, which essentially means initializing Selenium's WebDriver:

```python
driver = webdriver.Firefox()
```

During this step, we can pass options to our session. I'll pass the option to run Firefox in a private window, so the line above becomes:

```python
firefox_options = webdriver.FirefoxOptions()
firefox_options.add_argument("--private")
driver = webdriver.Firefox(options=firefox_options)
```

The driver instance will control how the browser behaves -- for example, navigating to a page, navigating back, selecting elements, clicking on elements, etc. Let's navigate to the pages we want to scrape. The LinuxQuestions.org forum is divided into several categories: hardware, software, server, security, etc. We just want to scrape the software questions, so we'll tell the driver to start on https://www.linuxquestions.org/questions/linux-software-2, scrape every question on the first page, then go to the second page of the software questions, then the next, and so on until we've scraped every question on every page. The primary way to navigate to a URL in Selenium is using the get() method, which accepts a URL string:

```python
driver.get("https://www.linuxquestions.org/questions/linux-software-2")
```

Selecting elements:

Once we've navigated to the desired URL, we want to select various elements so that we can get their content. Selenium provides a number of ways to select elements on a page, but the preferred methods are to select an element by its id or by a CSS selector. We'll first need to import the By class:

```python
from selenium.webdriver.common.by import By
```

Open up your browser and go to linuxquestions.org/questions/linux-software-2, if you haven't already. Inspect the page in DevTools. You'll note that each page has 50 threads, and each thread title is an anchor tag. We want to grab the thread title as well as the href of the anchor tag. Once we have the href, we can navigate to that URL and scrape more details.

In Selenium, we can find a single element with .find_element() (or a list of elements with .find_elements()). The first argument specifies the locator strategy: whether we want to find the element by id, CSS selector, tag name, etc. For a CSS selector, it looks like this:

```python
my_element = driver.find_element(By.CSS_SELECTOR, ".mySelector")
```

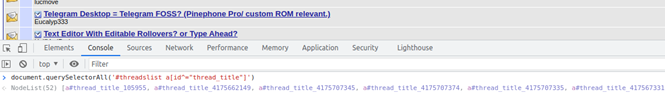

Note that if WebDriver can't find the element, it raises a NoSuchElementException. If the call isn't wrapped in a try-except, that halts execution. To avoid this, and to make sure we're scraping the correct data, we should do our best to confirm that our selectors capture the intended elements. Open the browser console. Since the console accepts client-side JavaScript, we can test our CSS selectors there with document.querySelector() and document.querySelectorAll().

With a little CSS trial and error, I found that the selector #threadslist a[id^="thread_title"] matches all the thread links. So, in our code, we pass this selector to .find_elements():

```python
threads = driver.find_elements(By.CSS_SELECTOR, '#threadslist a[id^="thread_title"]')
```

This gives us a list of WebElements, but there's a potential problem. We can see the anchor tags rendered on the page and we have a good CSS selector, yet WebDriver may still fail to find the elements if they haven't been rendered at the moment the script queries for them.
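The selector `#threadslist a[id^="thread_title"]` means "any `<a>` whose `id` starts with `thread_title`, anywhere inside the element with `id="threadslist"`". The standard-library sketch below applies that same rule to static HTML so you can reason about the selector offline. It is an illustration, not how browsers evaluate CSS. It also assumes well-formed markup where every non-void tag is closed, which real pages often don't guarantee.

```python
from html.parser import HTMLParser

# Tags that never have a closing tag, so they must not change the nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img",
             "input", "link", "meta", "source", "wbr"}


class ThreadTitleFinder(HTMLParser):
    """Mimic the CSS selector  #threadslist a[id^="thread_title"]  on static HTML."""

    def __init__(self):
        super().__init__()
        self.depth = 0     # > 0 while we are inside the element with id="threadslist"
        self.matches = []  # (id, href) of every matching <a> tag

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if self.depth:
            if tag not in VOID_TAGS:
                self.depth += 1
            # a[id^="thread_title"]: an <a> whose id *starts with* "thread_title"
            if tag == "a" and (attrs.get("id") or "").startswith("thread_title"):
                self.matches.append((attrs["id"], attrs.get("href")))
        elif attrs.get("id") == "threadslist":
            self.depth = 1  # entered #threadslist; descendants are now in scope

    def handle_endtag(self, tag):
        if self.depth and tag not in VOID_TAGS:
            self.depth -= 1
```

Feeding it a simplified, hypothetical snippet of forum markup returns only the title links inside the list. Links outside `#threadslist`, and links inside it whose ids don't start with `thread_title`, are ignored.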

Waits:

If you're new to Selenium, you may assume that once we navigate to a page, we can immediately start executing our scraping logic. However, driver.get() only waits for the initial page load (under the default page-load strategy, until the document has finished loading). WebDriver does not track content that scripts add or change afterward. It therefore doesn't know when the page is "ready" from our point of view, and it keeps executing the script regardless.

If you run our code in its current form, .find_elements() may come back empty (and .find_element() may raise a NoSuchElementException) because the elements haven't been rendered yet. Selenium's solution is waits. An explicit wait pauses the script until a condition is met, such as an element appearing on the page, or until a timeout expires. So, in our code, once the driver gets the initial URL, we'll tell it to wait until it finds a specific element. Let's use the .pagenav element, since most of the page has loaded by the time the pagination buttons are rendered. Here's what that looks like. First, import the WebDriverWait class:

```python
from selenium.webdriver.support.ui import WebDriverWait
```

Then we'll use the .until() method, passing in a function:

```python
WebDriverWait(driver, timeout=5).until(lambda d: d.find_element(By.CSS_SELECTOR, '.pagenav'))
```

This tells the driver to wait until it finds a .pagenav element on the page. The timeout caps the wait at 5 seconds. If the element still hasn't appeared by then, Selenium raises a TimeoutException. As a rule of thumb, whenever we navigate to a new page, we'll likely need some sort of wait.
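Under the hood, an explicit wait is a polling loop. It calls the condition, and if the call fails or returns something falsy, it sleeps briefly and tries again until the timeout runs out. Selenium's `WebDriverWait` uses a default `poll_frequency` of 0.5 seconds, ignores `NoSuchElementException` by default, and raises `TimeoutException` when time is up. The pure-Python sketch below is a simplified illustration of that idea, not Selenium's source code. Here, `LookupError` stands in for `NoSuchElementException` and the built-in `TimeoutError` stands in for `TimeoutException`.

```python
import time


def wait_until(condition, timeout=5.0, poll_frequency=0.5, ignored=(LookupError,)):
    """Call condition() until it returns a truthy value or `timeout` seconds pass.

    Simplified illustration of explicit-wait polling (not Selenium's implementation).
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            value = condition()
            if value:
                return value
        except ignored:
            pass  # treat "not found yet" like a falsy result and keep polling
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_frequency)
```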

Iterating through links:

Now that we solved the page loading problem, let's return to our previous task: selecting and iterating through the list of links. To recapitulate, here's what our code currently looks like:

```python
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait

firefox_options = webdriver.FirefoxOptions()
firefox_options.add_argument("--private")
driver = webdriver.Firefox(options=firefox_options)

driver.get("https://www.linuxquestions.org/questions/linux-software-2")

WebDriverWait(driver, timeout=5).until(lambda d: d.find_element(By.CSS_SELECTOR, '.pagenav'))

threads = driver.find_elements(By.CSS_SELECTOR, '#threadslist a[id^="thread_title"]')
```

We said that we wanted to grab all the thread titles and hrefs. Since .find_elements() returns a list, we can loop through the threads and map the properties we're looking for into a new list of (href, title) tuples:

```python
links = [(thread.get_attribute('href'), thread.text) for thread in threads]
```

With this list of links, we can visit each URL in a for-loop and scrape the thread's details. Note that we can't do this with the list of WebElements itself (e.g. the threads variable in our example). When you navigate to a new page, previously selected elements become "stale" and can't be used again (Selenium raises a StaleElementReferenceException). That's why we first copy the data we need from each WebElement into built-in types.
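A small named tuple makes this copy step explicit and gives each link readable field names. `ThreadLink` and `to_thread_links` are hypothetical names. Skipping anchors without an `href` is an extra safeguard that the original list comprehension doesn't have.

```python
from typing import NamedTuple


class ThreadLink(NamedTuple):
    url: str
    title: str


def to_thread_links(elements):
    """Copy href/text out of WebElements into plain tuples, skipping anchors with no href."""
    links = []
    for element in elements:
        href = element.get_attribute("href")
        if href:
            links.append(ThreadLink(url=href, title=element.text.strip()))
    return links
```

A `NamedTuple` still unpacks like a regular tuple, so `for thread_url, title in to_thread_links(threads):` works unchanged.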

Here's what our loop looks like:

```python
for thread_url, title in links:
    driver.get(thread_url)
    WebDriverWait(driver, timeout=5).until(lambda d: d.find_element(By.ID, 'posts'))
```

Notice that we have a wait immediately after navigating to a new page. I inspected the DOM and found that the individual posts in the thread are contained in the #posts element, so we'll use that for our wait. After the wait, we can select the elements whose data we want. Specifically, we want the description of the user's question (which is the first post in the thread) and the date it was asked. As before, we find these by inspecting the DOM and testing CSS selectors in the console. Here's what I came up with:

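```python
# inside the for-loop, after the wait
# (find_element returns the first match, i.e. the opening post):
post = driver.find_element(By.CSS_SELECTOR, '#posts table[id^="post"]')
description = post.find_element(By.CSS_SELECTOR, 'div[id^="post_message"]').text
date = post.find_element(By.CSS_SELECTOR, '.thead').text
```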

Note that once we've selected an element, we can use it for sub-queries. WebElements have their own .find_element() and .find_elements() methods, so these are not limited to the driver. Finally, we print all the data we've collected from this thread, which ends the body of the for-loop:

```python
print("title:", title, "description:", description, "url:", thread_url, "date:", date)
print("=======================")
```

Finally, after the loop, driver.quit() closes the browser and ends the WebDriver session. Here's what our complete code looks like:

```python
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait

firefox_options = webdriver.FirefoxOptions()
firefox_options.add_argument("--private")
driver = webdriver.Firefox(options=firefox_options)

driver.get('https://www.linuxquestions.org/questions/linux-software-2')
WebDriverWait(driver, timeout=5).until(lambda d: d.find_element(By.CSS_SELECTOR, '.pagenav'))

threads = driver.find_elements(By.CSS_SELECTOR, '#threadslist a[id^="thread_title"]')
# copy the data out of the WebElements before navigating away (avoids stale elements):
links = [(thread.get_attribute('href'), thread.text) for thread in threads]

for thread_url, title in links:
    driver.get(thread_url)
    WebDriverWait(driver, timeout=5).until(
        lambda d: d.find_element(By.ID, 'posts')
    )

    # the first post in the thread is the user's question:
    post = driver.find_element(By.CSS_SELECTOR, '#posts table[id^="post"]')
    description = post.find_element(By.CSS_SELECTOR, 'div[id^="post_message"]').text
    date = post.find_element(By.CSS_SELECTOR, '.thead').text

    print("title:", title, "description:", description, "url:", thread_url, "date:", date)
    print('=======================')
    # no driver.back() needed: the next iteration navigates straight to the next thread

driver.quit()
```

Let's save our Python script and run it from the terminal:

```bash
$ python scrape_linuxquestions.py
```

Python should boot up Firefox and go through the threads on the page, printing the data we scraped in the terminal.
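If any wait times out or a selector fails partway through, the script stops before it reaches driver.quit(). That can leave a Firefox window (and the geckodriver process) running. A `try`/`finally` guarantees cleanup, and the sketch below wraps it in a context manager. `browser_session` is a hypothetical helper, and `make_driver` is any zero-argument callable that returns a driver.

```python
from contextlib import contextmanager


@contextmanager
def browser_session(make_driver):
    """Start a driver with make_driver() and guarantee driver.quit() runs afterwards."""
    driver = make_driver()
    try:
        yield driver
    finally:
        driver.quit()  # runs on normal exit, on exceptions, and on Ctrl+C
```

To use it, write `with browser_session(lambda: webdriver.Firefox(options=firefox_options)) as driver:` and indent the scraping code under it. This replaces the manual `driver.quit()` call at the end.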

Iterating through all pages:

Let's refactor our program to scrape not just the first page, but all of the pages in the "software" section of the forums. We'll want to loop through all the pages of the section, and then have an inner loop for each thread on the page (the latter of which we've already done). In our code, after we start the WebDriver session and get the start URL, we'll begin our outer loop:

```python
driver.get('https://www.linuxquestions.org/questions/linux-software-2/')

while True:
    WebDriverWait(driver, timeout=5).until(
        lambda d: d.find_element(By.CSS_SELECTOR, '.pagenav')
    )
    back_url = driver.current_url
```

The number of pages in the forum is unknown and grows every day, so we'll loop indefinitely and break when necessary. Note also that the snippet above saves current_url for each page in back_url. We hop from one thread to the next without returning to the thread list page, so we can't simply go back one step in the browser history. After we've scraped all the threads on a page, we navigate back using the saved URL:

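```python
driver.get('https://www.linuxquestions.org/questions/linux-software-2/')

while True:
    WebDriverWait(driver, timeout=5).until(
        lambda d: d.find_element(By.CSS_SELECTOR, '.pagenav')
    )
    back_url = driver.current_url

    # get threads and map them like before:
    threads = driver.find_elements(By.CSS_SELECTOR, '#threadslist a[id^="thread_title"]')
    thread_links = [(thread.get_attribute('href'), thread.text) for thread in threads]

    for thread_url, title in thread_links:
        # same logic as before:
        driver.get(thread_url)
        WebDriverWait(driver, timeout=5).until(lambda d: d.find_element(By.ID, 'posts'))
        post = driver.find_element(By.CSS_SELECTOR, '#posts table[id^="post"]')
        description = post.find_element(By.CSS_SELECTOR, 'div[id^="post_message"]').text
        date = post.find_element(By.CSS_SELECTOR, '.thead').text
        print("title:", title, "description:", description, "url:", thread_url, "date:", date)
        print("=======================")

    # go back and wait:
    driver.get(back_url)
    WebDriverWait(driver, timeout=5).until(
        lambda d: d.find_element(By.CSS_SELECTOR, '.pagenav')
    )
    # (the "next page" / break logic is added in the next step)
```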

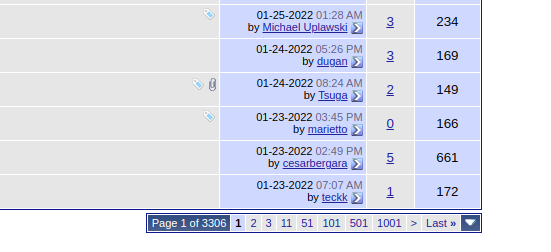

So, we've finished scraping the first page. As a human, to go to the next page, we could click the "next" pagination button, located near the bottom of the forum:

We'll do the same in Selenium. Let's query for the button and click it if we find it. If it doesn't exist, we've reached the last page and can break out of the outer loop:

```python
# inside the while-loop, after returning to the thread list page:
next_buttons = driver.find_elements(By.CSS_SELECTOR, '.pagenav a[rel="next"]')
if next_buttons:
    next_buttons[0].click()
else:
    break
```

Note the difference between the two methods when the element doesn't exist. .find_element() raises a NoSuchElementException, while .find_elements() (plural) simply returns an empty list. Both approaches are viable, but .find_element() needs to be wrapped in a try-except.

Saving to file:

Our program can now scrape thousands of pages. We're almost done, but there's one more improvement we can make. Right now, we're simply printing data to the terminal, and a more realistic approach is to save the data to a file or a database. For this example, we'll save our data to a CSV file. Afterward, we can load the CSV into a Pandas DataFrame and transform, analyze, or do anything else we want with it. We'll import Python's built-in csv module and create two helper functions. The first creates the CSV file and writes a header row, and the second appends data to the file after each page is completed:

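```python
import csv


def create_file():
    # newline='' is what the csv docs recommend; it avoids blank rows between records on Windows
    with open('scraped_questions.csv', 'w', newline='', encoding='utf-8') as file:
        writer = csv.writer(file)
        writer.writerow(['title', 'description', 'url', 'date'])


def append_to_file(rows):
    with open('scraped_questions.csv', 'a', newline='', encoding='utf-8') as file:
        writer = csv.writer(file)
        writer.writerows(rows)
```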

Here’s what our complete, refactored script looks like (after some minor tweaks):

```python
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
import csv

# ---------- constants: ----------
BASE_URL = 'https://www.linuxquestions.org/questions/linux-software-2/'
CSV_COL_NAMES = ['title', 'description', 'url', 'date']
FILE_NAME = 'scraped_questions.csv'


# ---------- helper functions: ----------
def create_file():
    # newline='' lets the csv module control line endings (avoids blank rows on Windows)
    with open(FILE_NAME, 'w', newline='', encoding='utf-8') as file:
        writer = csv.writer(file)
        writer.writerow(CSV_COL_NAMES)


def append_to_file(rows):
    with open(FILE_NAME, 'a', newline='', encoding='utf-8') as file:
        writer = csv.writer(file)
        writer.writerows(rows)


# ---------- main: ----------
if __name__ == '__main__':
    # start session:
    firefox_options = webdriver.FirefoxOptions()
    firefox_options.add_argument("--private")
    driver = webdriver.Firefox(options=firefox_options)

    create_file()

    # goto "overview" page (a listing of posts), then begin scraping logic:
    driver.get(BASE_URL)

    while True:
        WebDriverWait(driver, timeout=5).until(
            lambda d: d.find_element(By.CSS_SELECTOR, '.pagenav')
        )
        back_url = driver.current_url

        # we find a list of forum threads:
        # n.b. use of plural `.find_elements` rather than `find_element`:
        threads = driver.find_elements(By.CSS_SELECTOR, '#threadslist a[id^="thread_title"]')
        thread_links = [(thread.get_attribute('href'), thread.text) for thread in threads]

        # scrape every thread on the page:
        data = []
        for thread_url, title in thread_links:
            driver.get(thread_url)
            WebDriverWait(driver, timeout=5).until(
                lambda d: d.find_element(By.ID, 'posts')
            )
            post = driver.find_element(By.CSS_SELECTOR, '#posts table[id^="post"]')
            description = post.find_element(By.CSS_SELECTOR, 'div[id^="post_message"]').text
            date = post.find_element(By.CSS_SELECTOR, '.thead').text
            data.append([title, description, thread_url, date])

        # save this page's rows before moving on:
        append_to_file(data)

        # go back to questions page:
        driver.get(back_url)
        WebDriverWait(driver, timeout=5).until(
            lambda d: d.find_element(By.CSS_SELECTOR, '.pagenav')
        )

        # n.b. use of plural `.find_elements`: the singular
        # `.find_element` raises NoSuchElementException if the element is not found:
        next_buttons = driver.find_elements(By.CSS_SELECTOR, '.pagenav a[rel="next"]')

        # if we can't find any "next" pagination buttons, then
        # we reached the end of the threads; otherwise, go to next page:
        if next_buttons:
            next_buttons[0].click()
        else:
            break

    print('--- finished scraping successfully ---')
    driver.quit()
```
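After a run, you can load the CSV into a Pandas DataFrame with `pandas.read_csv('scraped_questions.csv')`, as mentioned earlier. If you'd rather stay in the standard library, `csv.DictReader` reads the file back as one dictionary per question. Opening the file with `newline=''` matters here because post descriptions often span several lines. The csv module quotes those fields when writing, and this setting lets it read them back intact. `load_questions` is a hypothetical helper name.

```python
import csv


def load_questions(path='scraped_questions.csv'):
    """Read the scraped CSV back as a list of dicts keyed by the header row."""
    # newline='' lets the csv module handle line breaks inside quoted fields
    with open(path, newline='', encoding='utf-8') as file:
        return list(csv.DictReader(file))
```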

Final thoughts:

Selenium's API has a myriad of methods, helper functions, and browser actions, and our mini project used only a small subset of them. Despite this, we were able to write a short script that scrapes thousands of pages and saves that information in a CSV file. Hopefully, this walk-through offered some insight into how we can leverage Selenium to automate web scraping. Remember that almost anything you can do while browsing the web, you can have Selenium do in the browser as well.